What Are the Uses and Benefits of AI in the Public Sector?

Applications of AI in the public sector are growing by leaps and bounds. Traffic management, healthcare delivery, tax form processing, citizen safety: you name it! AI is increasing the efficiency, effectiveness, and agility of public services. Yet, achieving its true potential requires strategic vision, specialist skills, and technical investment.

In a survey of public sector leaders, 63% of respondents worried that Gen AI would weaken trust in public agencies. This shows why public sector leaders need to take a cautious approach to rolling out AI. At the same time, as more public servants use AI tools to do their jobs, government entities can’t postpone adoption for long. What are the risks of using AI in the public sector? How can public agencies balance the benefits of AI against the perceived risks? And how can leaders get started with AI to improve public services? This blog is your handy guide to all these burning questions and more! Read on.

What are the Risks of Using AI in the Public Sector?

The use of AI in the public sector often raises questions about privacy, security, ethics, and bias in data. Some of the potential risks of AI in the public sector are:

1. Data Security and Privacy

Public sector organizations collect and handle a vast quantity of personal information. Using AI to process, analyze, and make decisions from this data raises significant privacy concerns. Public institutions and governments need to be aware of how their use of AI could impact citizen privacy.

2. Accountability

The use of machine learning and AI models helps automate decision-making. However, machine-made (software-led) decisions may result in bias. Robust policies are critical to ensure decision accountability and govern the use of AI applications in the public sector.

3. Trust

When using AI to automate administrative tasks or for predictive analytics, public institutions should take proper measures to safeguard citizen data from potential misuse. If data collected for one purpose is used for another, it could quickly erode public trust. AI-driven misinformation could also influence elections, damaging trust further.

4. Misleading Outputs

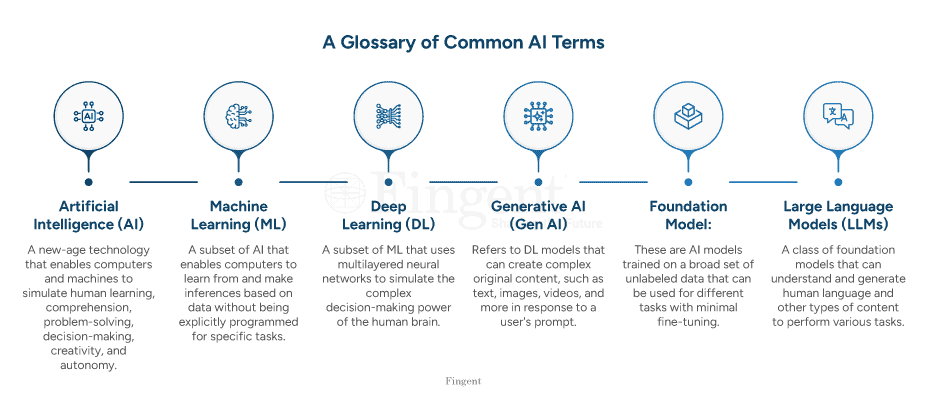

Generative AI models such as Large Language Models (LLMs) are trained on large datasets. Errors in training methodology and incomplete or inaccurate datasets can cause LLMs to generate incorrect, misleading, or even illogical outputs, creating serious risks for public service agencies.

5. Bias

AI learns statistical patterns from training data to generate human-like language. If the training data contains discriminatory or generalized content, it could lead to the reinforcement of those biases in language generation and output.

6. Lack of Regulations

Confidential data that citizens share with LLM providers through user prompts may be exposed to privacy violations. This risk demands that governments enforce appropriate legal and regulatory frameworks for governing (or restricting) the use of Gen AI tools by public servants.

7. Vendor Lock-in

Public sector leaders should be aware of the risk of vendor lock-in when choosing AI products and services. As LLMs become increasingly commoditized, public entities need to carefully choose vendors whose AI models outperform more sophisticated, general-purpose models in particular domains and tasks.


How to Tackle AI Risks in the Public Sector?

Public agencies and governments worldwide should take measures to combat the risks of using AI in the public sector.

What Do Governments Need to Do?

Government departments should create frameworks, regulations, and policies for using AI. Some of the existing and proposed AI-related regulations are:

- The European Union’s AI Act: This act is the first-ever legal framework for AI from a major regulator. It provides clear requirements for the use of AI. The act includes prohibitions against cognitive behavioral manipulation, biometric categorization, and social scoring.

- California’s AI Accountability Act: This act attempts to create guardrails for state agencies’ use of AI, including notifying citizens when interacting with AI.

- Canada’s Artificial Intelligence and Data Act: AIDA, tabled as part of Bill C-27, aims to implement measures to detect and curb the risks of individual and collective harm and biased output.

What Do Public Sector Organizations Need to Do?

Along with government regulations, individual public agencies should also build their own guardrails to use AI ethically and responsibly within their unique business context. Here are a few suggestions for public sector organizations to overcome the risks of AI usage:

- Ensure human oversight in AI decision-making that involves life-critical decisions. Human intervention can provide context in scenarios where errors or biases could lead to severe consequences.

- Train AI models with business data and context. Provide access to multiple models so it becomes easy to apply the right model to a given domain or task.

- To mitigate risks at different stages of model development, apply techniques such as anonymization, advanced prompt design, and output-based fine-tuning.

- Choose software development vendors that prioritize responsibility. Ensure their AI development standards and policies address security, privacy, bias, and other ethical concerns.

- Monitor LLMs continuously. Research shows that model behavior can change within a short time.
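To make the anonymization suggestion above more concrete, here is a minimal sketch that strips obvious identifiers from a prompt before it leaves the agency. The two patterns and the function name `redact_prompt` are assumptions made for illustration. They catch only simple email addresses and US-style phone numbers, so a real deployment would need far broader coverage.

```python
import re

# Deliberately simple, assumed patterns; real PII detection needs much more.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_PATTERN = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_prompt(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    return PHONE_PATTERN.sub("[PHONE]", text)


# Hypothetical prompt a public servant might send to an LLM.
prompt = "Summarize the complaint from jane.doe@example.gov, callback 555-123-4567"
safe_prompt = redact_prompt(prompt)
print(safe_prompt)
```

Redacting before the prompt is sent means the LLM provider never receives the identifier, which is usually easier to defend than trying to delete it afterwards.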

Governments and public sector organizations should:

- Test potential Gen AI use cases in low-risk settings before deciding to scale the use of the technology.

- Be transparent with the public about what, when, and how AI tools are being used. This will help build and retain trust in the technology.
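One way to picture human oversight and transparency working together is a simple routing rule. The sketch below is illustrative only: the `Recommendation` fields, the route labels, and the 0.90 confidence floor are all assumptions, and each agency would define its own.

```python
from dataclasses import dataclass

# Assumed cut-off; each agency would set its own after testing.
CONFIDENCE_FLOOR = 0.90


@dataclass
class Recommendation:
    case_id: str
    outcome: str
    confidence: float    # model's self-reported score between 0 and 1
    life_critical: bool  # set by the agency's own case classification


def route(rec: Recommendation) -> str:
    """Send life-critical or low-confidence cases to a person."""
    if rec.life_critical or rec.confidence < CONFIDENCE_FLOOR:
        return "human_review"
    # Automated outcomes still carry a notice that AI was involved.
    return "automated_with_ai_notice"


# Hypothetical cases for illustration.
queue = [
    Recommendation("case-001", "approve", 0.97, life_critical=False),
    Recommendation("case-002", "deny", 0.97, life_critical=True),
    Recommendation("case-003", "approve", 0.62, life_critical=False),
]
routes = {rec.case_id: route(rec) for rec in queue}
print(routes)
```

Checking `life_critical` first means a confident model can never bypass a person on the cases where errors matter most.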

How to Get Started With AI in the Public Sector?

The ideal way to get started is to focus on the low-hanging fruit first and then build on that success. A typical example is automating repetitive admin tasks such as data entry and record-keeping. Automation doesn’t mean replacing public servants but rather freeing them up to perform more valuable tasks.

Best Practices for Getting Started With AI in the Public Sector

- Find out how AI can add value and improve efficiencies for your core processes. Experiment with pre-configured AI scenarios delivered as part of your business applications.

- Start small with a pilot project that focuses on low-hanging fruit. Build on its success by gradually scaling and expanding your AI footprint. Extend your core business processes with available AI services.

- Build your own AI models. Create suitable training datasets and test different competing algorithms on the data. Evaluate how the models perform against various criteria, including ethical considerations.

- Convert tested AI services and models into usable scenarios. Integrate the proofs of concept with your existing software ecosystem and make the essential changes to see AI in action.

- ‘Model drift’ or behavioral change is a common issue in Gen AI models. It’s important to continuously monitor and review outputs to avoid biases that could creep into models almost unnoticed.

- Allow human talent to review and validate AI-generated outputs. Public servants should be well-trained to understand how to properly review LLM outputs.
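As a rough illustration of the monitoring step above, the sketch below compares how often a model produces each kind of output in a baseline period against a recent period, using the Population Stability Index (PSI). PSI is one common drift measure, swapped in here as an example rather than a prescribed method. The output labels, the sample data, and the 0.2 alert threshold (a widely quoted rule of thumb) are assumptions.

```python
import math
from collections import Counter

# Widely quoted rule of thumb; treat it as an assumption to tune.
DRIFT_THRESHOLD = 0.2


def category_shares(labels, categories, floor=1e-6):
    """Share of each category, floored so the logarithm stays defined."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    return {c: max(counts[c] / len(labels), floor) for c in categories}


def population_stability_index(baseline, recent):
    """PSI = sum over categories of (actual - expected) * ln(actual / expected)."""
    categories = sorted(set(baseline) | set(recent))
    expected = category_shares(baseline, categories)
    actual = category_shares(recent, categories)
    return sum(
        (actual[c] - expected[c]) * math.log(actual[c] / expected[c])
        for c in categories
    )


# Hypothetical output labels from two review periods.
baseline_labels = ["approve"] * 80 + ["refer_to_human"] * 20
recent_labels = ["approve"] * 55 + ["refer_to_human"] * 45

psi = population_stability_index(baseline_labels, recent_labels)
needs_review = psi > DRIFT_THRESHOLD
print(f"PSI={psi:.3f}, needs_review={needs_review}")
```

A score like this only signals that behavior has shifted. A trained reviewer still has to look at the outputs to decide whether the shift is a problem.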

Going forward, public institutions will need to migrate from legacy IT architecture to modern cloud-based platforms. That way, they can handle the sheer volume and scale of model training.

Different Approaches for Getting Started With AI

- Iterative Approach: Boston Consulting Group recommends an iterative approach to strategically implement AI. It focuses on experimentation and ongoing capability development, based on an organization’s AI maturity.

- Extensions Approach: Public sector organizations can start by experimenting with AI to digitize and automate processes in line with existing business applications.

- Embedded Approach: Organizations can embed AI in next-gen business applications to boost workplace productivity, optimize resources, and improve decision-making.

- Designing a Customized Approach: There is no one-size-fits-all approach for implementing AI in the public sector. Organizations need to consider a mix of AI capabilities to meet their diverse needs. Access to multiple AI models enables developers to apply the right model to the right task.


What Are the Common Use Cases for AI in the Public Sector?

Here are four use cases showing how public agencies are harnessing the power of AI to improve organizational efficiency and drive digital government transformation:

1. AI in Citizen Services

In many countries, public institutions are using AI to offer agile, personalized, and inclusive services to citizens. A few examples include:

- AI chatbots provide citizens with 24/7 assistance and information

- Online forums and surveys used by governments to get feedback on public services

- AI-based automated engagement platforms that help governments analyze public sentiment across social media and make informed decisions

- Gen AI tools that check public information for currency and accuracy

2. AI in Policy and Planning

AI has applications across each stage of policymaking, including identification, formulation, adoption, implementation, and evaluation.

- Identification: AI tools can rapidly synthesize large amounts of data and detect patterns. Machine learning can generate insights in near real time, allowing public sector leaders to take swift action.

- Formulation: Governments need to routinely forecast the anticipated costs, benefits, and outcomes of policy options. AI can speed up this process by providing quick insights.

- Adoption: Insights generated using AI allow regulators and lawmakers to forecast a policy’s potential impact, helping them make more informed decisions.

- Implementation: Automation and near real-time feedback analysis powered by AI help implement policies faster.

- Evaluation: AI tools can speed up the assessment of what needs to change in a policy. Insights provided by AI allow government officials to identify where a policy could be falling short or subject to fraud.

3. AI in Finance

AI helps increase performance across a range of financial activities from monitoring funds and grants to managing invoices, payments, auditing, forecasting, and more.

- AI tools can quickly analyze financial reports in high volumes and flag anomalies in invoices, payments, and more.

- Financial AI applications can help with property assessments. They can also detect discrepancies in the usage of grant money and funds.

- Gen AI tools can simplify budget-variance analysis and facilitate audits.

- AI is also used to detect and prevent financial fraud and money laundering.
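To give a feel for what flagging anomalies in invoices can look like, here is a minimal sketch that scores each invoice amount against the median using the modified z-score. It is a plain statistical check standing in for whatever model an agency actually uses. The invoice IDs, amounts, and the 3.5 cut-off (a commonly cited rule of thumb) are assumptions.

```python
from statistics import median

# Commonly cited rule-of-thumb cut-off; treat it as an assumption.
THRESHOLD = 3.5


def flag_anomalies(invoices, threshold=THRESHOLD):
    """Return (invoice_id, amount) pairs whose modified z-score exceeds the threshold."""
    if not invoices:
        return []
    amounts = [amount for _, amount in invoices]
    center = median(amounts)
    # Median absolute deviation: a spread measure that outliers barely move.
    mad = median(abs(a - center) for a in amounts)
    if mad == 0:
        return []  # no spread to measure against, so nothing can be scored
    return [
        (invoice_id, amount)
        for invoice_id, amount in invoices
        if 0.6745 * abs(amount - center) / mad > threshold
    ]


# Hypothetical invoices for one supplier.
invoices = [
    ("INV-001", 1200.0),
    ("INV-002", 1150.0),
    ("INV-003", 1300.0),
    ("INV-004", 1250.0),
    ("INV-005", 1180.0),
    ("INV-006", 9800.0),
]
flagged = flag_anomalies(invoices)
print(flagged)
```

The median is used instead of the mean because a single very large invoice would otherwise drag the average up and hide itself.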

4. AI Copilots

AI Copilots are intelligent virtual (conversational) assistants that can be integrated with a wide range of business systems. In the public sector, AI Copilots can be used for:

- Improving response times and accuracy in addressing citizen inquiries

- Making evidence-based policy recommendations

- Budget planning and resource distribution

- Streamlining project management

- Training and knowledge sharing

How Can Fingent Help With AI Development?

There is no doubt that Gen AI will augment human functions, freeing workers from mind-numbing tasks. As public institutions strive to develop competencies in AI, leaders should:

- Address any concerns the public service workforce may have about AI

- Impart foundational training on using Gen AI

- Offer upskilling or reskilling opportunities

As a top custom AI software development company, Fingent remains committed to ensuring the ethical and responsible use of AI. Together, we can harness the power of AI to drive innovation in the public sector. Want to see how AI can transform your agency’s operations? Contact our experts today and see how you can embrace AI more meaningfully.
